My OSTEP Notes

2024-07-01

book: https://pages.cs.wisc.edu/~remzi/OSTEP/

This page contains book highlights rather than personal notes.

-

Turning a single CPU (or a small set of them) into a seemingly infinite number of CPUs and thus allowing many programs to seemingly run at once is what we call virtualizing the CPU

-

Each process accesses its own private virtual address space (sometimes just called its address space), which the OS somehow maps onto the physical memory of the machine.

-

The key difference between a system call and a procedure call is that a system call transfers control (i.e., jumps) into the OS while simultaneously raising the hardware privilege level.

-

the OS can promote the illusion that many virtual CPUs exist when in fact there is only one physical CPU (or a few). This basic technique, known as time sharing of the CPU

-

In early (or simple) operating systems, the loading process (from disk) is done eagerly, i.e., all at once before running the program; modern OSes perform the process lazily, i.e., by loading pieces of code or data only as they are needed during program execution

-

in a cooperative scheduling system, the OS regains control of the CPU by waiting for a system call or an illegal operation of some kind to take place. (Cooperative Approach)

-

A timer device can be programmed to raise an interrupt every so many milliseconds; when the interrupt is raised, the currently running process is halted, and a pre-configured interrupt handler in the OS runs. (Non-Cooperative Approach)

-

A natural question you might have is: how long does something like a context switch take? Or even a system call? For those of you that are curious, there is a tool called lmbench [MS96] that measures exactly those things, as well as a few other performance measures that might be relevant.

-

The turnaround time of a job is defined as the time at which the job completes minus the time at which the job arrived in the system.

-

We define response time as the time from when the job arrives in a system to the first time it is scheduled
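
To make the two metrics concrete, here is a minimal sketch that computes turnaround and response time under a simple first-come-first-served schedule. The `Job` class, the `fifo_metrics` helper, and the three 10-unit jobs are illustrative assumptions, not something taken from the highlights.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    arrival: int  # time the job enters the system
    length: int   # CPU time the job needs


def fifo_metrics(jobs):
    """Run jobs first-come-first-served; return {name: (turnaround, response)}."""
    clock = 0
    metrics = {}
    for job in sorted(jobs, key=lambda j: j.arrival):
        start = max(clock, job.arrival)   # first time the job is scheduled
        finish = start + job.length       # non-preemptive: runs to completion
        turnaround = finish - job.arrival
        response = start - job.arrival
        metrics[job.name] = (turnaround, response)
        clock = finish
    return metrics


# Hypothetical workload: three jobs arrive together, each needing 10 time units.
jobs = [Job("A", 0, 10), Job("B", 0, 10), Job("C", 0, 10)]
metrics = fifo_metrics(jobs)
for name, (turnaround, response) in metrics.items():
    print(name, "turnaround:", turnaround, "response:", response)
```

With this workload the last job waits 20 time units before it first runs, which is why a run-to-completion policy tends to score poorly on response time.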

-

RR runs a job for a time slice (sometimes called a scheduling quantum)
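
A small simulation can show how the time slice drives the schedule. This is only a sketch: it assumes all jobs arrive at time 0, ignores context-switch cost, and the `round_robin` helper is a made-up name.

```python
from collections import deque


def round_robin(lengths, quantum):
    """Simulate RR for jobs that all arrive at t=0.

    lengths: {job name: CPU time needed}
    Returns (first_run, finish): when each job first ran and when it completed.
    """
    remaining = dict(lengths)
    queue = deque(remaining)          # job names in arrival order
    first_run, finish = {}, {}
    clock = 0
    while queue:
        name = queue.popleft()
        first_run.setdefault(name, clock)
        ran = min(quantum, remaining[name])  # run for one slice or until done
        clock += ran
        remaining[name] -= ran
        if remaining[name] > 0:
            queue.append(name)        # not finished: back of the queue
        else:
            finish[name] = clock
    return first_run, finish


first_run, finish = round_robin({"A": 5, "B": 5, "C": 5}, quantum=1)
print("first run:", first_run)
print("finish:   ", finish)
```

A short quantum gives every job an early first run (good response time) but stretches out each job's completion (poor turnaround).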

-

SJF: Shortest Job First

-

STCF: Shortest Time-to-Completion First

-

MLFQ: Multi-level Feedback Queue

-

MLFQ will try to learn about processes as they run, and thus use the history of the job to predict its future behavior

-

MLFQ Rules:

- Rule 1: If Priority(A) > Priority(B), A runs (B doesn't).

- Rule 2: If Priority(A) = Priority(B), A & B run in RR.

- Rule 3: When a job enters the system, it is placed at the highest priority (the topmost queue).

- Rule 4a: If a job uses up its allotment while running, its priority is reduced (i.e., it moves down one queue).

- Rule 4b: If a job gives up the CPU (for example, by performing an I/O operation) before the allotment is up, it stays at the same priority level (i.e., its allotment is reset).

- Rule 4 (revised, replacing 4a and 4b): Once a job uses up its time allotment at a given level (regardless of how many times it has given up the CPU), its priority is reduced (i.e., it moves down one queue).

- Rule 5: After some time period S, move all the jobs in the system to the topmost queue.
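
The rules above can be sketched as a tick-by-tick simulation. This is a simplified illustration, not the book's code: jobs are CPU-bound only (no I/O), the RR time slice is one tick, and the `mlfq` function with its `allotment` and `boost_period` parameters is hypothetical.

```python
from collections import deque


def mlfq(jobs, num_queues=3, allotment=2, boost_period=20, max_ticks=100):
    """jobs: {name: CPU ticks needed}. Returns a per-tick trace of (name, level).

    Level 0 is the topmost (highest-priority) queue.
    """
    queues = [deque() for _ in range(num_queues)]
    remaining = dict(jobs)
    used = {name: 0 for name in jobs}     # ticks used at the current level
    for name in jobs:
        queues[0].append(name)            # Rule 3: start at the top
    trace = []
    for tick in range(max_ticks):
        if tick > 0 and tick % boost_period == 0:
            for q in queues[1:]:          # Rule 5: periodic priority boost
                while q:
                    queues[0].append(q.popleft())
            used = dict.fromkeys(used, 0)
        # Rule 1: run from the highest-priority non-empty queue.
        level = next((i for i, q in enumerate(queues) if q), None)
        if level is None:
            break                         # nothing left to run
        name = queues[level].popleft()    # Rule 2: RR within a level
        trace.append((name, level))
        remaining[name] -= 1
        used[name] += 1
        if remaining[name] == 0:
            continue                      # job finished
        if used[name] >= allotment:
            used[name] = 0                # fresh allotment at the next level
            level = min(level + 1, num_queues - 1)  # Rule 4: move down
        queues[level].append(name)
    return trace


no_boost = mlfq({"A": 6}, boost_period=1000)
with_boost = mlfq({"A": 8}, boost_period=5)
print(no_boost)
print(with_boost)
```

Without a boost the single job sinks one level each time it burns its allotment; with `boost_period=5` it is lifted back to the top queue at tick 5.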

-

Of course, the addition of the time period S leads to the obvious question: what should S be set to? John Ousterhout, a well-regarded systems researcher [O11], used to call such values in systems voo-doo constants

-

proportional-share/fair-share: instead of optimizing for turnaround or response time, a scheduler might instead try to guarantee that each job obtain a certain percentage of CPU time

-

we'd like for each job to finish at roughly the same time, but due to the randomness of lottery scheduling, sometimes one job finishes before the other. To quantify this difference, we define a simple fairness metric, F
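
A rough sketch of the experiment, assuming F is the time the first job completes divided by the time the second job completes (so F near 1 means both finish at about the same time). The helper names, ticket counts, and seed are illustrative assumptions.

```python
import random


def pick_winner(tickets, live, rng):
    """Draw a winning ticket and walk the live jobs to find its owner."""
    total = sum(tickets[i] for i in live)
    winning = rng.randrange(total)
    counter = 0
    for i in live:
        counter += tickets[i]
        if counter > winning:
            return i


def lottery_fairness(run_time, tickets=(100, 100), seed=0):
    """Two jobs of equal length compete; return F = first finish / second finish."""
    rng = random.Random(seed)
    remaining = [run_time, run_time]
    finish = {}
    clock = 0
    while len(finish) < 2:
        live = [i for i in (0, 1) if i not in finish]
        winner = pick_winner(tickets, live, rng)
        clock += 1                      # the winner runs for one time slice
        remaining[winner] -= 1
        if remaining[winner] == 0:
            finish[winner] = clock
    first, second = sorted(finish.values())
    return first / second


for length in (1, 10, 100, 1000):
    print(length, round(lottery_fairness(length), 3))
```

With a job length of 1 the result is exactly 0.5 (one job must finish a full slice before the other); longer jobs give the randomness more time to even out.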

-

Despite these earlier works in fair-share scheduling, the current Linux approach achieves similar goals in an alternate manner. The scheduler, entitled the Completely Fair Scheduler (CFS)

- Its goal is simple: to fairly divide a CPU evenly among all competing processes

- each process's vruntime increases at the same rate, in proportion with physical (real) time. When a scheduling decision occurs, CFS will pick the process with the lowest vruntime to run next.

- CFS manages this tension through various control parameters. The first is sched_latency. CFS uses this value to determine how long one process should run before considering a switch

- A typical sched_latency value is 48 (milliseconds); CFS divides this value by the number (n) of processes running on the CPU to determine the time slice for a process, and thus ensures that over this period of time, CFS will be completely fair.

- But what if there are "too many" processes running? Wouldn't that lead to too small of a time slice, … To address this issue, CFS adds another parameter, min_granularity, which is usually set to a value like 6 ms. CFS will never set the time slice of a process to less than this value
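
The arithmetic in these highlights fits in a few lines. This is a sketch of the described calculation only, not the kernel's code; the function names are made up, and the defaults are the 48 ms and 6 ms values quoted above.

```python
def cfs_time_slice(n_running, sched_latency_ms=48.0, min_granularity_ms=6.0):
    """Per-process time slice: sched_latency / n, but never below min_granularity."""
    return max(sched_latency_ms / n_running, min_granularity_ms)


def pick_next(vruntimes):
    """Return the process with the lowest vruntime. vruntimes: {name: vruntime}."""
    return min(vruntimes, key=vruntimes.get)


print(cfs_time_slice(4))    # 48 / 4 = 12.0 ms
print(cfs_time_slice(10))   # 48 / 10 = 4.8 ms, raised to the 6.0 ms floor
print(pick_next({"A": 30.0, "B": 10.0, "C": 20.0}))
```

Once the floor applies, one full pass over all processes takes longer than sched_latency, which is the price paid for avoiding excessive context switching.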

-

Recent studies have shown that scheduler efficiency is surprisingly important; specifically, in a study of Google datacenters, Kanev et al. show that even after aggressive optimization, scheduling uses about 5% of overall datacenter CPU time

-

Caches are thus based on the notion of locality, of which there are two kinds: temporal locality and spatial locality.

-

The idea behind temporal locality is that when a piece of data is accessed, it is likely to be accessed again in the near future; imagine variables or even instructions themselves being accessed over and over again in a loop. The idea behind spatial locality is that if a program accesses a data item at address x, it is likely to access data items near x as well;

-

writing the data through all the way to main memory is slow, so the system will (usually) do that later.

-

The basic solution is provided by the hardware: by monitoring memory accesses, hardware can ensure that basically the "right thing" happens and that the view of a single shared memory is preserved. One way to do this on a bus-based system (as described above) is to use an old technique known as bus snooping [G83]; each cache pays attention to memory updates by observing the bus that connects them to main memory. When a CPU then sees an update for a data item it holds in its cache, it will notice the change and either invalidate its copy (i.e., remove it from its own cache) or update it (i.e., put the new value into its cache too). Write-back caches, as hinted at above, make this more complicated (because the write to main memory isn't visible until later), but you can imagine how the basic scheme might work.

-

One final issue arises in building a multiprocessor cache scheduler, known as cache affinity [TTG95]. This notion is simple: a process, when run on a particular CPU, builds up a fair bit of state in the caches (and TLBs) of the CPU. ==The next time the process runs, it is often advantageous to run it on the same CPU==, as it will run faster if some of its state is already present in the caches on that CPU. If, instead, one runs a process on a different CPU each time, the performance of the process will be worse, as it will have to reload the state each time it runs (note it will run correctly on a different CPU thanks to the cache coherence protocols of the hardware). Thus, a multiprocessor scheduler should consider cache affinity when making its scheduling decisions, perhaps preferring to keep a process on the same CPU if at all possible.

-

by putting all jobs that need to be scheduled into a single queue; we call this single-queue multiprocessor scheduling or SQMS for short (==disadvantage: synchronization overhead==).

-

most SQMS schedulers include some kind of affinity mechanism to try to make it more likely that a process will continue to run on the same CPU if possible

-

Because of the problems caused in single-queue schedulers, some systems opt for multiple queues, e.g., one per CPU. We call this approach multi-queue multiprocessor scheduling (or MQMS).

-

A disadvantage of MQMS is ==load imbalance== (think of a CPU with an empty queue, or a small queue compared to other CPUs)

- The obvious answer to this query is to move jobs around, a technique which we (once again) refer to as migration.

- One basic approach is to use a technique known as work stealing [FLR98]. With a work-stealing approach, a (source) queue that is low on jobs will occasionally peek at another (target) queue, to see how full it is. If the target queue is (notably) more full than the source queue, the source will "steal" one or more jobs from the target to help balance load.

- ==If you look around at other queues too often, you will suffer from high overhead and have trouble scaling,==
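
A minimal sketch of the work-stealing idea, assuming one deque of job names per CPU. The `maybe_steal` helper, the `threshold` parameter, and the choice to steal a single job from the tail are illustrative assumptions, and locking is left out.

```python
import random
from collections import deque


def maybe_steal(queues, thief, rng, threshold=2):
    """The queue at index `thief` peeks at one random other queue.

    If that queue holds at least `threshold` more jobs, one job is moved over.
    Returns True if a job was stolen.
    """
    victims = [i for i in range(len(queues)) if i != thief]
    victim = rng.choice(victims)
    if len(queues[victim]) - len(queues[thief]) >= threshold:
        queues[thief].append(queues[victim].pop())
        return True
    return False


# Hypothetical state: CPU 0 is idle while CPU 1 has four queued jobs.
queues = [deque(), deque(["A", "B", "C", "D"])]
stole = maybe_steal(queues, thief=0, rng=random.Random(0))
print(stole, [list(q) for q in queues])
```

How often `maybe_steal` is called is the tuning knob the last highlight warns about: peeking too often costs overhead, peeking too rarely leaves the load imbalanced.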

-

BFS, the only single-queue approach among the three, is also proportional-share, but based on a more complicated scheme known as Earliest Eligible Virtual Deadline First (EEVDF)

-

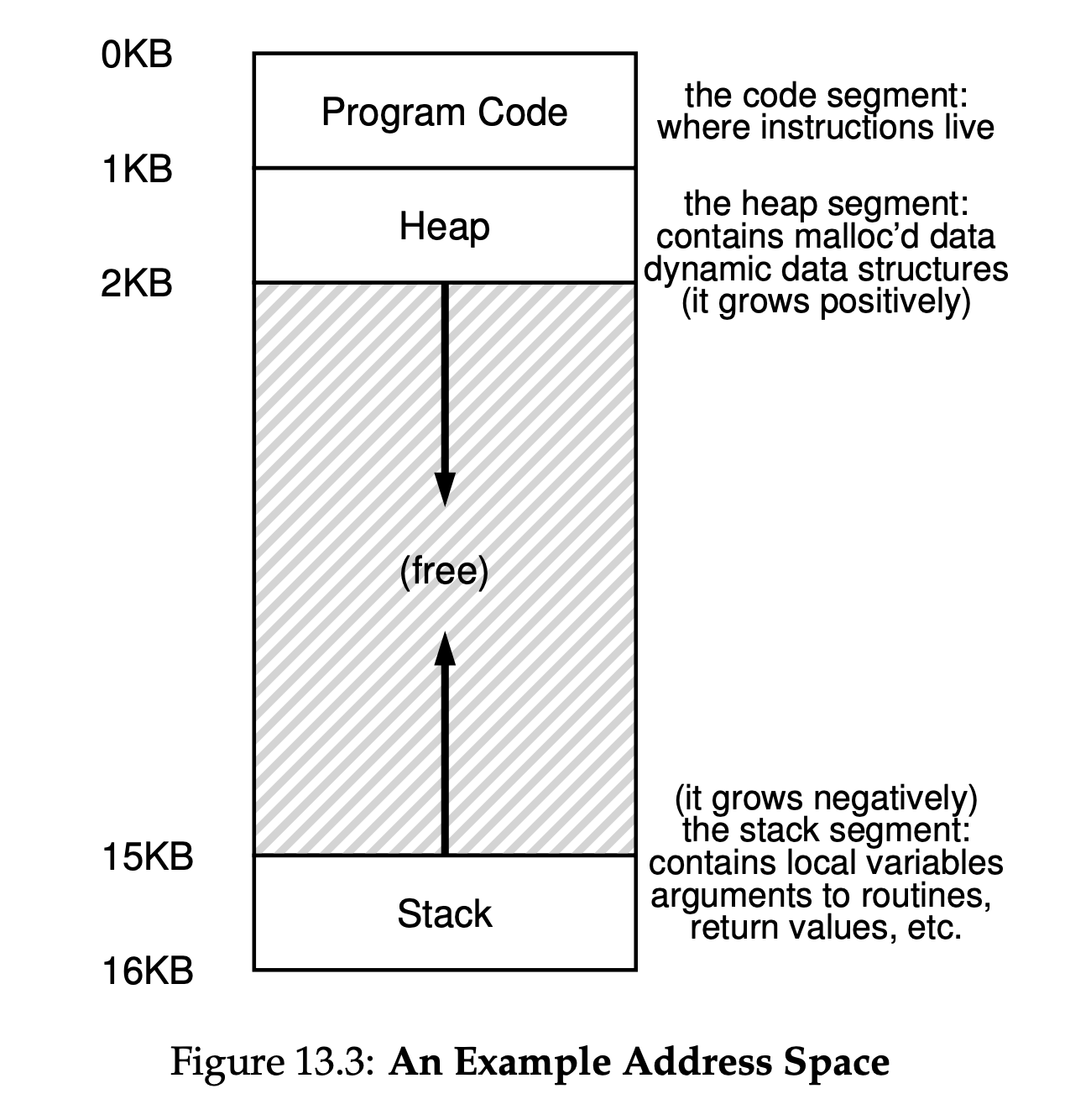

Address Space: The running program's view of memory in the system.

-

Virtual Memory goals:

- transparency: The OS should implement virtual memory in a way that is invisible to the running program. Thus, the program shouldn't be aware of the fact that memory is virtualized

- efficiency: The OS should strive to make the virtualization as efficient as possible, both in terms of time (i.e., not making programs run much more slowly) and space (i.e., not using too much memory for structures needed to support virtualization).

- protection: The OS should make sure to protect processes from one another as well as the OS itself from processes

- One such system call is called brk, which is used to change the location of the program's break: the location of the end of the heap.

-

hardware-based address translation, or just address translation for short. With address translation, the hardware transforms each memory access (e.g., an instruction fetch, load, or store), changing the virtual address provided by the instruction to a physical address where the desired information is actually located.

-

To gain some understanding of hardware-based address translation, we'll first discuss its first incarnation. Introduced in the first time-sharing machines of the late 1950's is a simple idea referred to as base and bounds; the technique is also referred to as dynamic relocation; we'll use both terms interchangeably.

-

physical address = virtual address + base register

-

and because we can move address spaces even after the process has started running, the technique is often referred to as dynamic relocation

-

We should note that the base and bounds registers are hardware structures kept on the chip (one pair per CPU). Sometimes people call the part of the processor that helps with address translation the memory management unit (MMU)
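
Base-and-bounds translation can be sketched as follows. The `BaseBounds` class is hypothetical, and it assumes the bounds register holds the size of the address space (rather than its ending physical address).

```python
class BaseBounds:
    """Toy model of the per-CPU base and bounds register pair."""

    def __init__(self, base, bounds):
        self.base = base      # physical address where the address space starts
        self.bounds = bounds  # assumed here: size of the address space in bytes

    def translate(self, vaddr):
        # The hardware checks the bound first, then adds the base.
        if not 0 <= vaddr < self.bounds:
            raise MemoryError(f"virtual address {vaddr} is out of bounds")
        return vaddr + self.base


# Hypothetical process loaded at physical address 32 KB with a 16 KB address space.
mmu = BaseBounds(base=32 * 1024, bounds=16 * 1024)
print(mmu.translate(128))     # 128 + 32768

# Dynamic relocation: the OS copies the address space elsewhere and
# only has to update the base register.
mmu.base = 48 * 1024
print(mmu.translate(128))     # 128 + 49152
```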

-

Allocators could of course also have the problem of internal fragmentation; if an allocator hands out chunks of memory bigger than that requested, any unasked for (and thus unused) space in such a chunk is considered internal fragmentation (because the waste occurs inside the allocated unit) and is another example of space waste

-

we view physical memory as an array of fixed-sized slots called page frames;

-

To record where each virtual page of the address space is placed in physical memory, the operating system usually keeps a per-process data structure known as a page table.

-

To translate this virtual address that the process generated, we have to first split it into two components: the virtual page number (VPN), and the offset within the page.

-

Physical Frame Number (PFN) aka Physical Page Number (PPN)

-

VPN (Virtual Page Number)

-

Virtual Address = VPN (top bits) + offset (low bits)

-

log2(page size) = offset bits

-

2^(VPN bits) = number of virtual pages

-

page table → one entry per VPN (PTE) → PFN

-

PTBR(page table base register)

-

PTE: PTBR + (VPN * sizeof(PTE))
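
Putting the VPN/offset split and the PTE lookup together, here is a minimal sketch of a linear page table. It assumes a tiny 64-byte address space with 16-byte pages; the page-table contents, the `ptbr` value, and the 4-byte PTE size are made-up illustrations.

```python
PAGE_SIZE = 16                              # bytes per page (assumed)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1    # log2(page size) = 4 offset bits
OFFSET_MASK = PAGE_SIZE - 1

# Hypothetical per-process page table: VPN -> PFN.
page_table = {0: 3, 1: 7, 2: 5, 3: 2}


def translate(vaddr):
    """Split the virtual address, look up the PFN, and rebuild the physical address."""
    vpn = vaddr >> OFFSET_BITS       # top bits
    offset = vaddr & OFFSET_MASK     # low bits, unchanged by translation
    pfn = page_table[vpn]
    return (pfn << OFFSET_BITS) | offset


def pte_address(ptbr, vpn, pte_size=4):
    """Where the hardware finds the PTE: PTBR + (VPN * sizeof(PTE))."""
    return ptbr + vpn * pte_size


print(translate(21))                 # VPN 1, offset 5 -> PFN 7 -> 7 * 16 + 5
print(pte_address(ptbr=4096, vpn=1))
```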

-

————

-

you can see two stacks spread throughout the address space of the process. Thus, any stack-allocated variables, parameters, return values, and other things that we put on the stack will be placed in what is sometimes called thread-local storage, i.e., the stack of the relevant thread.

-

A critical section is a piece of code that accesses a shared resource, usually a variable or data structure.

-

A race condition (or data race [NM92]) arises if multiple threads of execution enter the critical section at roughly the same time

-

"The OS was the first concurrent program"

-

The other major component of any threads library, and certainly the case with POSIX threads, is the presence of a condition variable. Condition variables are useful when some kind of signaling must take place between threads, if one thread is waiting for another to do something before it can continue.
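
The signaling pattern can be sketched with Python's `threading.Condition`, which pairs a lock with a condition variable. This stands in for the POSIX calls rather than reproducing them; the `wait_for_child` function and the `state` dictionary are illustrative.

```python
import threading


def wait_for_child():
    """Parent waits until the child thread signals that it is done."""
    state = {"done": False}
    cond = threading.Condition()      # a lock plus a condition variable

    def child():
        with cond:                    # hold the lock while changing state
            state["done"] = True
            cond.notify()             # wake the waiting parent, if any

    worker = threading.Thread(target=child)
    worker.start()
    with cond:
        while not state["done"]:      # re-check the condition after every wakeup
            cond.wait()               # releases the lock while sleeping
    worker.join()
    return state["done"]


print(wait_for_child())
```

The `while` loop (rather than an `if`) and the shared `done` flag are what make this safe whether the child runs before or after the parent starts waiting.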

-

Before building any locks, we should first understand what our goals are:

- The first is whether the lock does its basic task, which is to provide mutual exclusion

- The second is fairness. Does each thread contending for the lock get a fair shot at acquiring it once it is free? Another way to look at this is by examining the more extreme case: does any thread contending for the lock starve while doing so, thus never obtaining it?

- The final criterion is performance, specifically the time overheads added by using the lock.

-

Because disabling interrupts does not work on multiple processors, and because simple approaches using loads and stores (as shown above) don't work, system designers started to invent hardware support for locking. The earliest multiprocessor systems, such as the Burroughs B5000 in the early 1960's [M82], had such support; today all systems provide this type of support, even for single CPU systems.

-

You can address the priority inversion problem in a number of ways. In the specific case where spin locks cause the problem, you can avoid using spin locks. More generally, a higher-priority thread waiting for a lower-priority thread can temporarily boost the lower thread's priority, thus enabling it to run and overcoming the inversion, a technique known as priority inheritance. A last solution is simplest: ensure all threads have the same priority.

-

Linux provides a futex, which is similar to the Solaris interface but with more in-kernel functionality

-

The next synchronization problem we will confront in this chapter is known as the producer/consumer problem, or sometimes as the bounded buffer problem, which was first posed by Dijkstra [D72]. Indeed, it was this very producer/consumer problem that led Dijkstra and his co-workers to invent the generalized ==semaphore==

-

Lampson and Redell call such a condition a covering condition, as it covers all the cases where a thread needs to wake up

-

A semaphore is an object with an integer value that we can manipulate with two routines
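
As an illustration, here is a sketch of the bounded buffer (producer/consumer) problem built on semaphores. It uses Python's `threading.Semaphore`, whose `acquire()` and `release()` play the role of the two routines (wait and post); the `produce_consume` function and its parameters are assumptions made for this example.

```python
import threading
from collections import deque


def produce_consume(items, capacity=2):
    """One producer and one consumer share a buffer of `capacity` slots."""
    buffer = deque()
    empty = threading.Semaphore(capacity)  # counts free slots
    full = threading.Semaphore(0)          # counts filled slots
    mutex = threading.Lock()               # protects the buffer itself
    consumed = []

    def producer():
        for item in items:
            empty.acquire()                # wait for a free slot
            with mutex:
                buffer.append(item)
            full.release()                 # announce a filled slot

    def consumer():
        for _ in items:
            full.acquire()                 # wait for a filled slot
            with mutex:
                consumed.append(buffer.popleft())
            empty.release()                # announce a free slot

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed


print(produce_consume([1, 2, 3, 4, 5]))
```

The semaphore's initial value carries the meaning: `empty` starts at the buffer capacity, `full` starts at zero.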

-

Many database systems employ deadlock detection and recovery techniques. A deadlock detector runs periodically, building a resource graph and checking it for cycles.
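
The cycle check at the heart of such a detector can be sketched with a depth-first search. This assumes the resource graph has already been reduced to a wait-for graph mapping each thread (or transaction) to the ones it is waiting on; the `has_cycle` name and the sample graphs are illustrative.

```python
def has_cycle(wait_for):
    """wait_for: {node: iterable of nodes it waits on}. True if a cycle exists."""
    UNSEEN, IN_PROGRESS, DONE = 0, 1, 2
    state = {}

    def visit(node):
        state[node] = IN_PROGRESS
        for nxt in wait_for.get(node, ()):
            nxt_state = state.get(nxt, UNSEEN)
            if nxt_state == IN_PROGRESS:
                return True               # back edge: we looped onto our own path
            if nxt_state == UNSEEN and visit(nxt):
                return True
        state[node] = DONE
        return False

    return any(state.get(n, UNSEEN) == UNSEEN and visit(n) for n in wait_for)


deadlocked = {"T1": ["T2"], "T2": ["T1"]}   # each waits on the other
healthy = {"T1": ["T2"], "T2": []}          # T2 can finish, then T1
print(has_cycle(deadlocked), has_cycle(healthy))
```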

-

The solution, as described by Adya et al. [A+02], is to use an old programming language construct known as a continuation [FHK84]. Though it sounds complicated, the idea is rather simple: basically, record the needed information to finish processing this event in some data structure; when the event happens (i.e., when the disk I/O completes), look up the needed information and process the event.

-

Some devices are connected to the system via a general I/O bus, which in many modern systems would be PCI (or one of its many derivatives); graphics and some other higher-performance I/O devices might be found here. Finally, even lower down are one or more of what we call a peripheral bus, such as SCSI, SATA, or USB. These connect slow devices to the system, including disks, mice, and keyboards.

One question you might ask is: why do we need a hierarchical structure like this? Put simply: physics, and cost. The faster a bus is, the shorter it must be; thus, a high-performance memory bus does not have much room to plug devices and such into it. In addition, engineering a bus for high performance is quite costly. Thus, system designers have adopted this hierarchical approach, where components that demand high performance (such as the graphics card) are nearer the CPU. Lower performance components are further away. The benefits of placing disks and other slow devices on a peripheral bus are manifold; in particular, you can place a large number of devices on it.

-

The CPU connects to an I/O chip via Intel's proprietary DMI (Direct Media Interface), and the rest of the devices connect to this chip via a number of different interconnects. On the right, one or more hard drives connect to the system via the eSATA interface; ATA (the AT Attachment, in reference to providing connection to the IBM PC AT), then SATA (for Serial ATA), and now eSATA (for external SATA)

-

PCIe (Peripheral Component Interconnect Express)

-

Using an interrupt in this case will actually slow down the system: switching to another process, handling the interrupt, and switching back to the issuing process is expensive. Thus, if a device is fast, it may be best to poll; if it is slow, interrupts,

-

Another interrupt-based optimization is coalescing. In such a setup, a device which needs to raise an interrupt first waits for a bit before delivering the interrupt to the CPU. ==While waiting, other requests may soon complete, and thus multiple interrupts can be coalesced into a single interrupt delivery==, thus lowering the overhead of interrupt processing. Of course, waiting too long will increase the latency of a request, a common trade-off in systems.

-

The solution to this problem is something we refer to as Direct Memory Access (DMA). A DMA engine is essentially a very specific device within a system that can orchestrate transfers between devices and main memory without much CPU intervention.

-

The drive consists of a large number of sectors (512-byte blocks), each of which can be read or written. The sectors are numbered from 0 to n − 1 on a disk with n sectors. Thus, we can view the disk as an array of sectors; 0 to n − 1 is thus the address space of the drive.

-

Multi-sector operations are possible; indeed, many file systems will read or write 4KB at a time (or more). However, when updating the disk, the only guarantee drive manufacturers make is that a single 512-byte write is atomic (i.e., it will either complete in its entirety or it won't complete at all); thus, if an untimely power loss occurs, only a portion of a larger write may complete (sometimes called a torn write).

-

On writes, the drive has a choice: should it acknowledge the write has completed when it has put the data in its memory, or after the write has actually been written to disk? The former is called write back caching (or sometimes immediate reporting), and the latter write through.

-

Redundant Array of Inexpensive Disks better known as RAID

-

We now consider three important RAID designs: RAID Level 0 (striping), RAID Level 1 (mirroring), and RAID Levels 4/5 (parity-based redundancy).

-

Thus, determining the "best" chunk size is hard to do, as it requires a great deal of knowledge about the workload presented to the disk system

-

Let us now evaluate the capacity, reliability, and performance of striping. From the perspective of capacity, it is perfect: given N disks each of size B blocks, striping delivers N ·B blocks of useful capacity. From the standpoint of reliability, striping is also perfect, but in the bad way: any disk failure will lead to data loss. Finally, performance is excellent: all disks are utilized, often in parallel, to service user I/O requests.
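
For a concrete picture of striping, this sketch maps a logical block number to a (disk, offset) pair and computes the useful capacity. The function names and the `chunk_blocks` parameter are assumptions for illustration.

```python
def raid0_map(logical_block, num_disks, chunk_blocks=1):
    """Map a logical block to (disk index, block offset on that disk)."""
    chunk = logical_block // chunk_blocks       # which chunk the block falls in
    within = logical_block % chunk_blocks       # position inside that chunk
    disk = chunk % num_disks                    # chunks go round-robin over disks
    offset = (chunk // num_disks) * chunk_blocks + within
    return disk, offset


def raid0_capacity(num_disks, blocks_per_disk):
    """Striping stores no redundancy, so every block is useful: N * B."""
    return num_disks * blocks_per_disk


print(raid0_map(14, num_disks=4))                   # chunk size of one block
print(raid0_map(10, num_disks=4, chunk_blocks=2))   # chunk size of two blocks
print(raid0_capacity(4, 1000))
```

With a one-block chunk this reduces to disk = A % N and offset = A // N for logical block A.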

-

The consistent-update problem is solved with a write-ahead log (WAL); this log usually lives in non-volatile (battery-backed) RAM to avoid the high cost of logging to disk.

-

For historical reasons, the low-level name of a file is often referred to as its inode number (i-number).

-

Interestingly, this sequence does not guarantee everything that you might expect; in some cases, you also need to fsync() the directory that contains the file foo. Adding this step ensures not only that the file itself is on disk, but that the file, if newly created, also is durably a part of the directory. Not surprisingly, this type of detail is often overlooked, leading to many application-level bugs
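
A sketch of the extra step, using Python's `os` module on a POSIX system (opening a directory and calling `fsync` on it is not expected to work on Windows). The `durable_create` helper is hypothetical, and it assumes the data is small enough for a single `os.write` call.

```python
import os
import tempfile


def durable_create(path, data):
    """Write a new file, then fsync both the file and its parent directory."""
    fd = os.open(path, os.O_CREAT | os.O_WRONLY | os.O_TRUNC, 0o644)
    try:
        os.write(fd, data)   # assumption: small payload, written in one call
        os.fsync(fd)         # force the file's data to disk
    finally:
        os.close(fd)

    # The new name lives in the directory, so the directory must be
    # flushed too for the file to durably be part of it.
    dir_fd = os.open(os.path.dirname(os.path.abspath(path)), os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "foo")
    durable_create(target, b"hello\n")
    with open(target, "rb") as f:
        contents = f.read()
print(contents)
```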

-

In 1974, McPhee noticed a problem in computer systems. Specifically, McPhee noted that "... if there exists a time interval between a validity-check and the operation connected with that validity-check, [and,] through multitasking, the validity-check variables can deliberately be changed during this time interval, resulting in an invalid operation being performed by the control program." We today call this the Time Of Check To Time Of Use (TOCTTOU)

-

In most UNIX file systems, the root inode number is 2.